1. What’s new

1.1. What’s new in Apache Unomi 2.0

Apache Unomi 2 is a new release focused on improving core functionalities and robustness of the product.

The introduction of tighter data validation with JSON Schemas required some changes in the product data model, which presented an opportunity for noticeable improvements in the overall performance.

This new release also introduces a first (beta) version of the Unomi GraphQL API.

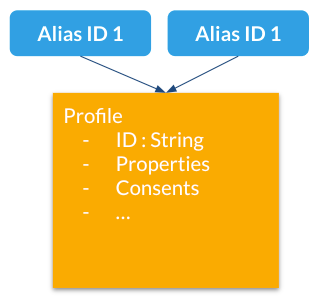

1.1.1. Introducing profiles aliases

Profiles may now have alias IDs, which is a new way to reference profiles using multiple IDs. The Unomi ID still exists, but a new index with aliases can reference a single Unomi profile. This enables more flexible integrations with external systems, as well as provide more flexible and reliable merging mechanisms. A new REST API makes it easy to define, update and remove aliases for profiles. You can read more about profile aliases here.

1.1.2. Scopes declarations are now required

Scopes declarations are now required in Unomi 2. When submitting an event and specifying a scope, that scope must already be declared on the platform.

Scopes can be easily created via the corresponding REST API (cxs/scopes)

For example, an "apache" scope can be created using the following API call.

curl --location --request POST 'http://localhost:8181/cxs/scopes' \

-u 'karaf:karaf' \

--header 'Content-Type: application/json' \

--data-raw '{

"itemId": "apache",

"itemType": "scope"

}'1.1.3. JSON Schemas

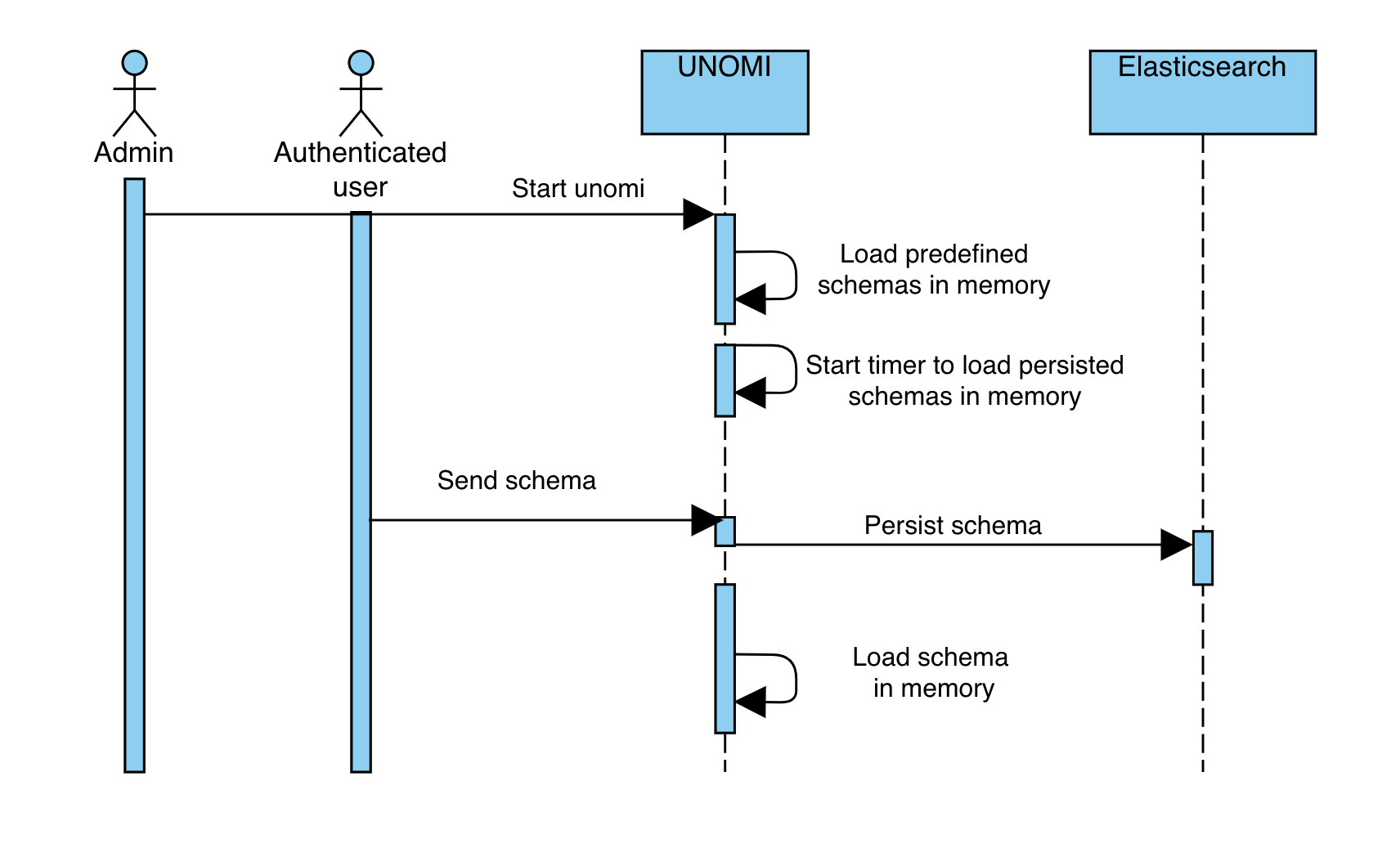

Apache Unomi 2 introduces support for JSON Schema for all of its publicly exposed endpoints. Data received by Apache Unomi 2 will first be validated against a known schema to make sure it complies with an expected payload. If the received payload does not match a known schema, it will be rejected by Apache Unomi 2.

Apache Unomi 2 also introduces a set of administrative endpoints allowing new schemas and/or schemas extensions to be registered.

More details about JSON Schemas implementation are available in the corresponding section of the documentation.

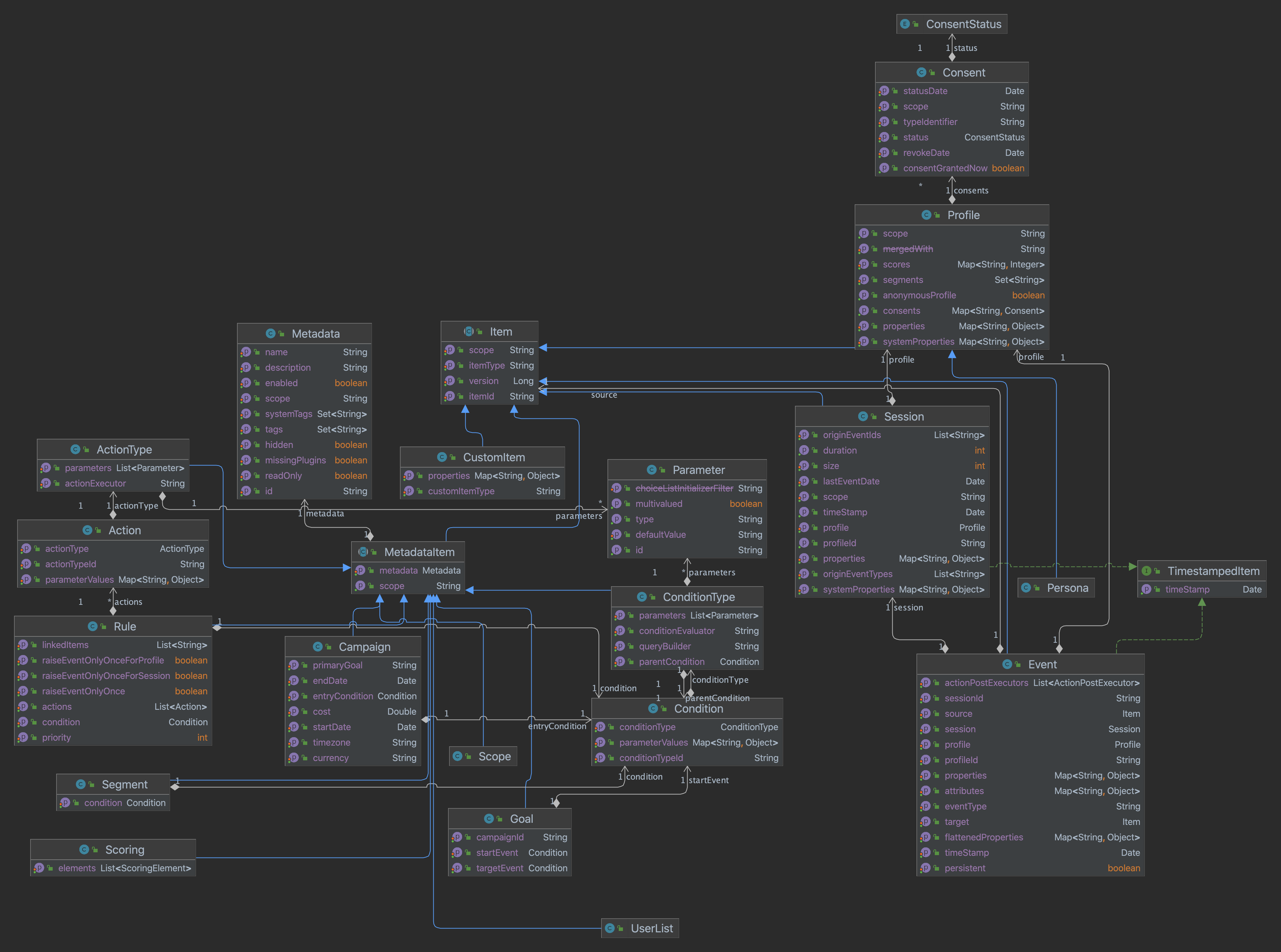

1.1.4. Updated data model

The introduction of JSON schema required us to modify Apache Unomi data model, One of the key differences is the removal of open maps.

The properties field in the events objects provided by unomi are now restricted by JSON schema. This means object properties must be declared in a JSON schema for an event to be accepted.

A new property, flattenedProperties has been introduced to the event object, this property has been added to store the properties as flattened in Elasticsearch and should avoid mapping explosion for dynamic properties.

If there is dynamic properties that you want to send with your event, you should use the flattenedProperties field of the event.

It’s also necessary to specify the format of the values which are added to flattenedProperties by JSON schema but these value will be stored as flattened and will not create dynamic mapping contrary to the properties field of the events.

Here is an example for objects that used dynamic properties for URL parameters:

The following event example in Apache Unomi 1.x:

{

"eventType":"view",

"scope":"digitall",

"properties":{

"URLParameters":{

"utm_source":"source"

}

},

"target":{

"scope":"digitall",

"itemId":"30c0a9e3-4330-417d-9c66-4c1beec85f08",

"itemType":"page",

"properties":{

"pageInfo":{

"pageID":"30c0a9e3-4330-417d-9c66-4c1beec85f08",

"nodeType":"jnt:page",

"pageName":"Home",

...

},

"attributes":{},

"consentTypes":[]

}

},

"source":{

"scope":"digitall",

"itemId":"ff5886e0-d75a-4061-9de9-d90dfc9e18d8",

"itemType":"site"

}

}Is replaced by the following in Apache Unomi 2.x:

{

"eventType":"view",

"scope":"digitall",

"flattenedProperties":{

"URLParameters":{

"utm_source":"source"

}

},

"target":{

"scope":"digitall",

"itemId":"30c0a9e3-4330-417d-9c66-4c1beec85f08",

"itemType":"page",

"properties":{

"pageInfo":{

"pageID":"30c0a9e3-4330-417d-9c66-4c1beec85f08",

"nodeType":"jnt:page",

"pageName":"Home",

...

},

"attributes":{},

"consentTypes":[]

}

},

"source":{

"scope":"digitall",

"itemId":"ff5886e0-d75a-4061-9de9-d90dfc9e18d8",

"itemType":"site"

}

}If using the default Apache 1.x data model, our Unomi 2 migration process will handle the data model changes for you.

If you are using custom events/objects, please refer to the detailed migration guide for more details.

1.1.5. New Web Tracker

Apache Unomi 2.0 Web Tracker, located in extensions/web-tracker/ has been completely rewritten. It is no longer based on an external library and is fully self-sufficient. It is based on an external contribution that has been used in production on many sites.

You can find more information about the new web tracker here.

1.1.6. GraphQL API - beta

Apache Unomi 2.0 sees the introduction of a new (beta) GraphQL API. Available behind a feature flag (the API disabled by default), the GraphQL API is available for you to play with.

More details about how to enable/disable the GraphQL API are available in the corresponding section of the documentation.

We welcome tickets/PRs to improve its robustness and progressively make it ready for prime time.

1.1.7. Migrate from Unomi 1.x

To facilitate migration we prepared a set of scripts that will automatically handle the migration of your data from Apache Unomi 1.5+ to Apache Unomi 2.0.

It is worth keeping in mind that for Apache Unomi 2.0 we do not support “hot” migration, the migration process will require a shutdown of your cluster to guarantee that no new events will be collected while data migration is in progress.

Special caution must be taken if you declared custom events as our migration scripts can only handle objects we know of. More details about migration (incl. of custom events) is available in the corresponding section corresponding section of the documentation.

1.1.8. Elasticsearch compatibility

We currently recommend using Elasticsearch 7.17.5 with Apache Unomi 2.0, this ensure you are on a recent version that is not impacted by the log4j vulnerabilities (fixed in Elasticsearch 7.16.3).

This version increase is releated to Apache Unomi 2.0 makeing use of a new Elasticsearch field type called Flattened, and although it was available in prior versions of Elasticsearch, we do not recommend using those due to the above-mentioned log4j vulnerabilities.

2. Discover Unomi

2.1. Quick start with Docker

Begin by creating a docker-compose.yml file with the following content:

version: '3.8'

services:

elasticsearch:

image: docker.elastic.co/elasticsearch/elasticsearch:7.17.5

environment:

- discovery.type=single-node

ports:

- 9200:9200

unomi:

# Unomi version can be updated based on your needs

image: apache/unomi:2.0.0

environment:

- UNOMI_ELASTICSEARCH_ADDRESSES=elasticsearch:9200

- UNOMI_THIRDPARTY_PROVIDER1_IPADDRESSES=0.0.0.0/0,::1,127.0.0.1

ports:

- 8181:8181

- 9443:9443

- 8102:8102

links:

- elasticsearch

depends_on:

- elasticsearchFrom the same folder, start the environment using docker-compose up and wait for the startup to complete.

Try accessing https://localhost:9443/cxs/cluster with username/password: karaf/karaf . You might get a certificate warning in your browser, just accept it despite the warning it is safe.

2.2. Quick Start manually

1) Install JDK 11 (https://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html) and make sure you set the JAVA_HOME variable https://docs.oracle.com/cd/E19182-01/820-7851/inst_cli_jdk_javahome_t/ (see our Getting Started guide for more information on JDK compatibility)

2) Download ElasticSearch here : https://www.elastic.co/downloads/past-releases/elasticsearch-7-17-5 (please <strong>make sure</strong> you use the proper version : 7.17.5)

3) Uncompress it and change the config/elasticsearch.yml to include the following config : <code>cluster.name: contextElasticSearch</code>

4) Launch ElasticSearch using : bin/elasticsearch

5) Download Apache Unomi here : https://unomi.apache.org/download.html

6) Start it using : ./bin/karaf

7) Start the Apache Unomi packages using unomi:start in the Apache Karaf Shell

8) Wait for startup to complete

9) Try accessing https://localhost:9443/cxs/cluster with username/password: karaf/karaf . You might get a certificate warning in your browser, just accept it despite the warning it is safe.

10) Request your first context by simply accessing : http://localhost:8181/cxs/context.js?sessionId=1234

11) If something goes wrong, you should check the logs in ./data/log/karaf.log. If you get errors on ElasticSearch,

make sure you are using the proper version.

Next steps:

-

Trying our integration samples page

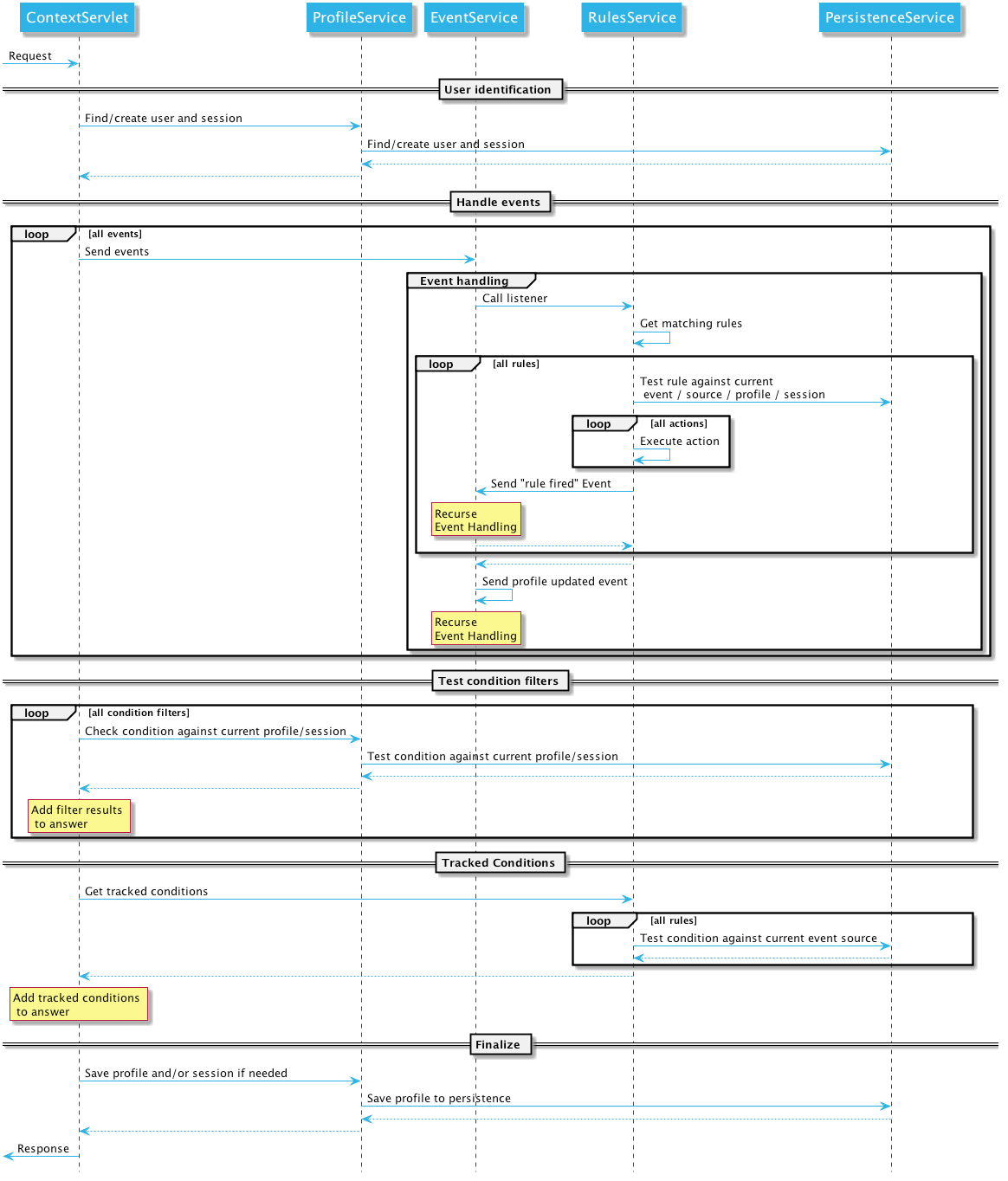

2.3. Getting started with Unomi

We will first get you up and running with an example. We will then lift the corner of the cover somewhat and explain in greater details what just happened.

2.3.1. Prerequisites

This document assumes working knowledge of git to be able to retrieve the code for Unomi and the example. Additionally, you will require a working Java 11 or above install. Refer to http://www.oracle.com/technetwork/java/javase/ for details on how to download and install Java SE 11 or greater.

JDK compatibility

Starting with Java 9, Oracle made some big changes to the Java platform releases. This is why Apache Unomi is focused on supporting the Long Term Supported versions of the JDK, currently version 11. We do not test with intermediate versions so they may or may not work properly. Currently the most tested version is version 11.

Also, as there are new licensing restrictions on JDKs provided by Oracle for production usages, Apache Unomi has also added support for OpenJDK builds. Other JDK distributions might also work but are not regularly tested so you should use them at your own risks.

ElasticSearch compatibility

Starting with version 2.0.0 Apache Unomi adds compatibility with ElasticSearch 7.17.5 . It is highly recommended to use the ElasticSearch version specified in the documentation whenever possible. If in doubt, don’t hesitate to check with the Apache Unomi community to get the latest information about ElasticSearch version compatibility.

2.3.2. Running Unomi

Start Unomi

Start Unomi according to the quick start with docker or by compiling using the building instructions. Once you have Karaf running, you should wait until you see the following messages on the Karaf console:

Initializing user list service endpoint...

Initializing geonames service endpoint...

Initializing segment service endpoint...

Initializing scoring service endpoint...

Initializing campaigns service endpoint...

Initializing rule service endpoint...

Initializing profile service endpoint...

Initializing cluster service endpoint...This indicates that all the Unomi services are started and ready to react to requests. You can then open a browser and go to http://localhost:8181/cxs to see the list of

available RESTful services or retrieve an initial context at http://localhost:8181/cxs/context.json (which isn’t very useful at this point).

You can now find an introduction page at the following location: http://localhost:8181

Also now that your service is up and running you can go look at the request examples to learn basic requests you can do once your server is up and running.

2.4. Unomi web tracking tutorial

In this tutorial we will guide through the basic steps of getting started with a web tracking project. You will see how to integrate the built-in web tracker with an existing web site and what this enables.

If you prefer to use existing HTML and Javascript rather than building your own, all the code we feature in this tutorial is extracted from our tracker sample which is available here: https://github.com/apache/unomi/blob/master/extensions/web-tracker/wab/src/main/webapp/index.html . However you will still need to use the REST API calls to create the scope and rule to make it all work.

2.4.1. Installing the web tracker in a web page

Using the built-in tracker is pretty simple, simply add the following code to your HTML page :

<script type="text/javascript" src="/tracker/unomi-web-tracker.min.js"></script>or you can also use the non-minified version that is available here:

<script type="text/javascript" src="/tracker/unomi-web-tracker.js"></script>This will only load the tracker. To initialize it use a snipper like the following code:

<script type="text/javascript">

(function () {

const unomiTrackerTestConf = {

"scope": "unomi-tracker-test",

"site": {

"siteInfo": {

"siteID": "unomi-tracker-test"

}

},

"page": {

"pageInfo": {

"pageID": "unomi-tracker-test-page",

"pageName": document.title,

"pagePath": document.location.pathname,

"destinationURL": document.location.origin + document.location.pathname,

"language": "en",

"categories": [],

"tags": []

},

"attributes": {},

"consentTypes": []

},

"events:": [],

"wemInitConfig": {

"contextServerUrl": document.location.origin,

"timeoutInMilliseconds": "1500",

"contextServerCookieName": "context-profile-id",

"activateWem": true,

"trackerSessionIdCookieName": "unomi-tracker-test-session-id",

"trackerProfileIdCookieName": "unomi-tracker-test-profile-id"

}

}

// generate a new session

if (unomiWebTracker.getCookie(unomiTrackerTestConf.wemInitConfig.trackerSessionIdCookieName) == null) {

unomiWebTracker.setCookie(unomiTrackerTestConf.wemInitConfig.trackerSessionIdCookieName, unomiWebTracker.generateGuid(), 1);

}

// init tracker with our conf

unomiWebTracker.initTracker(unomiTrackerTestConf);

unomiWebTracker._registerCallback(() => {

console.log("Unomi tracker test successfully loaded context", unomiWebTracker.getLoadedContext());

}, 'Unomi tracker test callback example');

// start the tracker

unomiWebTracker.startTracker();

})();

</script>2.4.2. Creating a scope to collect the data

You might notice the scope used in the snippet. All events sent to Unomi must be associated with a scope, that must have been created before events are accepted. So in order to make sure the events are collected with the above Javascript code, we must create a scope with the following request.

curl --location --request POST 'http://localhost:8181/cxs/scopes' \

--header 'Authorization: Basic a2FyYWY6a2FyYWY=' \

--header 'Content-Type: application/json' \

--data-raw '{

"itemId": "unomi-tracker-test",

"metadata": {

"id": "unomi-tracker-test",

"name": "Unomi tracker Test Scope"

}

}'The authorization is the default username/password for the REST API, which is karaf:karaf and you that should definitely be changed as soon as possible by modifying the etc/users.properties file.

2.4.3. Using tracker in your own JavaScript projects

The tracker also exists as an NPM library that you can integrate with your own Javascript projects. You can find the library here:

https://www.npmjs.com/package/apache-unomi-tracker

Here’s an example on how to use it:

yarn add apache-unomi-trackerYou can then simply use it in your JS code using something like this:

import {useTracker} from "apache-unomi-tracker";

(function () {

const unomiWebTracker = useTracker();

const unomiTrackerTestConf = {

"scope": "unomi-tracker-test",

"site": {

"siteInfo": {

"siteID": "unomi-tracker-test"

}

},

"page": {

"pageInfo": {

"pageID": "unomi-tracker-test-page",

"pageName": document.title,

"pagePath": document.location.pathname,

"destinationURL": document.location.origin + document.location.pathname,

"language": "en",

"categories": [],

"tags": []

},

"attributes": {},

"consentTypes": []

},

"events:": [],

"wemInitConfig": {

"contextServerUrl": document.location.origin,

"timeoutInMilliseconds": "1500",

"contextServerCookieName": "context-profile-id",

"activateWem": true,

"trackerSessionIdCookieName": "unomi-tracker-test-session-id",

"trackerProfileIdCookieName": "unomi-tracker-test-profile-id"

}

}

// generate a new session

if (unomiWebTracker.getCookie(unomiTrackerTestConf.wemInitConfig.trackerSessionIdCookieName) == null) {

unomiWebTracker.setCookie(unomiTrackerTestConf.wemInitConfig.trackerSessionIdCookieName, unomiWebTracker.generateGuid(), 1);

}

// init tracker with our conf

unomiWebTracker.initTracker(unomiTrackerTestConf);

unomiWebTracker._registerCallback(() => {

console.log("Unomi tracker test successfully loaded context", unomiWebTracker.getLoadedContext());

}, 'Unomi tracker test callback example');

// start the tracker

unomiWebTracker.startTracker();

})();2.4.4. Viewing collected events

There are multiple ways to view the events that were received. For example, you could use the following cURL request:

curl --location --request POST 'http://localhost:8181/cxs/events/search' \

--header 'Authorization: Basic a2FyYWY6a2FyYWY=' \

--header 'Content-Type: application/json' \

--data-raw '{

"sortby" : "timeStamp:desc",

"condition" : {

"type" : "matchAllCondition"

}

}'Another (powerful) way to look at events is to use the SSH Console. You can connect to it with the following shell command:

ssh -p 8102 karaf@localhostUsing the same username password (karaf:karaf) and then you can use command such as :

-

event-tailto view in realtime the events as they come in (CTRL+C to stop) -

event-listto view the latest events -

event-view EVENT_IDto view the details of a specific event

2.4.5. Viewing the current profile

By default, Unomi uses a cookie called context-profile-id to keep track of the current profile. You can use this the value of this cookie which contains a UUID to lookup the details of the profile. For example with the SSH console you can simply to:

profile-view PROFILE_UUID

Which will print out the details of the profile with the associated ID.

Another interesting command is profile-list to list all the recently modified profiles

You could also retrieve the profile details using the REST API by using a request such as this one:

curl --location --request GET 'http://localhost:8181/cxs/profiles/PROFILE_UUID' \

--header 'Authorization: Basic a2FyYWY6a2FyYWY=' \2.4.6. Adding a rule

Rules are a powerful ways to react in real-time to incoming events. For example a rule could update a profile when a certain event comes in, either copying values from the event or performing some kind of computation when the event occurs, including accessing remote systems such as a Salesforce CRM (see the Salesforce connector sample).

In this example we will simply setup a basic rule that will react to the view event and set a property in the current profile.

curl --location --request POST 'http://localhost:8181/cxs/rules' \

--header 'Authorization: Basic a2FyYWY6a2FyYWY=' \

--header 'Content-Type: application/json' \

--data-raw '{

"metadata": {

"id": "viewEventRule",

"name": "View event rule",

"description": "Increments a property on a profile to indicate that this rule executed successfully when a view event occurs"

},

"condition": {

"type": "eventTypeCondition",

"parameterValues": {

"eventTypeId": "view"

}

},

"actions": [

{

"type": "incrementPropertyAction",

"parameterValues": {

"propertyName": "pageViewCount"

}

}

]

}'The above rule will execute when a view event is received (which is automatically sent by the tracker when a page is loaded) and increments a property called pageViewCount on the user’s profile.

You can then reload then page and check with the profile-view PROFILE_UUID SSH command that the profile was updated with the new property and that it is incremented on each page reload.

You can also use the rule-list command to display all the rules in the system and the rule-tail to watch in real-time which rules are executed. The rule-view RULE_ID command will let you view the contents of a rule.

2.4.7. Adding personalization

The last step is to use the newly added property to the profile to perform some page personalization. In order to do that we will use the tracker’s API to register a personalization that will be using a condition that checks if the pageViewCount is higher than 5. If it has, variant1 will be displayed, otherwise the fallback variant variant2 will be used instead.

variants = {

"var1" : {

content : "variant1",

},

"var2" : {

content : "variant2",

}

}

unomiWebTracker.registerPersonalizationObject({

"id": "testPersonalization",

"strategy": "matching-first",

"strategyOptions": {"fallback": "var2"},

"contents": [{

"id": "var1",

"filters": [{

"condition": {

"type": "profilePropertyCondition",

"parameterValues": {

"propertyName" : "properties.pageViewCount.<scope>",

"comparisonOperator" : "greaterThan",

"propertyValueInteger" : 5

}

}

}]

}, {

"id": "var2"

}]

}, variants, false, function (successfulFilters, selectedFilter) {

if (selectedFilter) {

document.getElementById(selectedFilter.content).style.display = '';

}

});As you can see in the above code snippet, a variants array is created with two objects that associated personalization IDs with content IDs. Then we build the personalization object that contains the two IDs and their associated conditions (only a condition on var1 is passed in this case) as well as an option to indicate which is the fallback variant in case no conditions are matched.

The HTML part of this example looks like this:

<div id="variant1" style="display: none">

You have already seen this page 5 times

</div>

<div id="variant2" style="display: none">

Welcome. Please reload this page 5 times until it triggers the personalization change

</div>As you can see we hide the variants by default so that there is no "flashing" effect and then use the callback function to display to variant resolve by Unomi’s personalization engine.

2.4.8. Conclusion

What have we achieved so far ?

-

Installed a tracker in a web page

-

Created a scope in which to collect the data

-

Learned how to use the tracker as an NPM library

-

How to view the collected events

-

How to view the current visitor profile

-

How to add a rule to update a profile property

-

How to personalize a web page’s content based on the property updated by the rule

Of course this tutorial is just one example of what could be achieved, and hasn’t even yet introduced more advanced notions such as profile segmentation or Groovy action scripting. The system is capable of much more, for example by directly using its actions to integrate with third-party systems (CRM, social networks, etc..)

2.4.9. Next steps

-

Learn more about the web tracker, custom events, API, …

-

Learn more about segmentation

-

View some more samples

-

Continue reading Unomi’s user manual to see all that is possible with this technology

3. Apache Unomi Recipes and requests

3.1. Recipes

3.1.1. Introduction

In this section of the documentation we provide quick recipes focused on helping you achieve a specific result with Apache Unomi.

3.1.2. Enabling debug mode

Although the examples provided in this documentation are correct (they will work "as-is"), you might be tempted to modify them to fit your use case, which might result in errors.

The best approach during development is to enable Apache Unomi debug mode, which will provide you with more detailed logs about events processing.

The debug mode can be activated via the karaf SSH console (default credentials are karaf/karaf):

ubuntu@ip-10-0-3-252:~/$ ssh -p 8102 karaf@localhost

Password authentication

Password:

__ __ ____

/ //_/____ __________ _/ __/

/ ,< / __ `/ ___/ __ `/ /_

/ /| |/ /_/ / / / /_/ / __/

/_/ |_|\__,_/_/ \__,_/_/

Apache Karaf (4.2.15)

Hit '<tab>' for a list of available commands

and '[cmd] --help' for help on a specific command.

Hit 'system:shutdown' to shutdown Karaf.

Hit '<ctrl-d>' or type 'logout' to disconnect shell from current session.

karaf@root()> log:set DEBUG org.apache.unomi.schema.impl.SchemaServiceImplYou can then either watch the logs via your preferred logging mechanism (docker logs, log file, …) or simply tail the logs to the terminal you used to enable debug mode.

karaf@root()> log:tail

08:55:28.128 DEBUG [qtp1422628821-128] Schema validation found 2 errors while validating against schema: https://unomi.apache.org/schemas/json/events/view/1-0-0

08:55:28.138 DEBUG [qtp1422628821-128] Validation error: There are unevaluated properties at following paths $.source.properties

08:55:28.140 DEBUG [qtp1422628821-128] Validation error: There are unevaluated properties at following paths $.source.itemId, $.source.itemType, $.source.scope, $.source.properties

08:55:28.142 ERROR [qtp1422628821-128] An event was rejected - switch to DEBUG log level for more informationThe example above shows schema validation failure at the $.source.properties path.

Note that the validation will output one log line for the exact failing path and a log line for its parent,

therefore to find the source of a schema validation issue it’s best to start from the top.

3.1.3. How to read a profile

The simplest way to retrieve profile data for the current profile is to simply send a request to the /cxs/context.json endpoint. However you will need to send a body along with that request. Here’s an example:

Here is an example that will retrieve all the session and profile properties, as well as the profile’s segments and scores

curl -X POST http://localhost:8181/cxs/context.json?sessionId=1234 \

-H "Content-Type: application/json" \

--data-raw '{

"source": {

"itemId":"homepage",

"itemType":"page",

"scope":"example"

},

"requiredProfileProperties":["*"],

"requiredSessionProperties":["*"],

"requireSegments":true,

"requireScores":true

}'The requiredProfileProperties and requiredSessionProperties are properties that take an array of property names

that should be retrieved. In this case we use the wildcard character '*' to say we want to retrieve all the available

properties. The structure of the JSON object that you should send is a JSON-serialized version of the

ContextRequest Java class.

Note that it is also possible to access a profile’s data through the /cxs/profiles/ endpoint but that really should be reserved to administrative purposes. All public accesses should always use the /cxs/context.json endpoint for consistency and security.

3.1.4. How to update a profile from the public internet

Before we get into how to update a profile directly from a request coming from the public internet, we’ll quickly talk first about how NOT to do it, because we often see users using the following anti-patterns.

How NOT to update a profile from the public internet

Please avoid using the /cxs/profile endpoint. This endpoint was initially the only way to update a profile but it has multiple issues:

-

it requires authenticated access. The temptation can be great to use this endpoint because it is simple to access but the risk is that developers might include the credentials to access it in non-secure parts of code such as client-side code. Since there is no difference between this endpoint and any other administration-focused endpoints, attackers could easily re-use stolen credentials to wreak havock on the whole platform.

-

No history of profile modifications is kept: this can be a problem for multiple reasons: you might want to keep an trail of profile modifications, or even a history of profile values in case you want to understand how a profile property was modified.

-

Even when protected using some kind of proxy, potentially the whole profile properties might be modified, including ones that you might not want to be overriden.

Recommended ways to update a profile

Instead you can use the following solutions to update profiles:

-

(Preferred) Use you own custom event(s) to send data you want to be inserted in a profile, and use rules to map the event data to the profile. This is simpler than it sounds, as usually all it requires is setting up a simple rule, defining the corresponding JSON schema and you’re ready to update profiles using events.

-

Use the protected built-in "updateProperties" event. This event is designed to be used for administrative purposes only. Again, prefer the custom events solution because as this is a protected event it will require sending the Unomi key as a request header, and as Unomi only supports a single key for the moment it could be problematic if the key is intercepted. But at least by using an event you will get the benefits of auditing and historical property modification tracing.

Let’s go into more detail about the preferred way to update a profile. Let’s consider the following example of a rule:

curl -X POST http://localhost:8181/cxs/rules \

--user karaf:karaf \

-H "Content-Type: application/json" \

--data-raw '{

"metadata": {

"id": "setContactInfo",

"name": "Copy the received contact info to the current profile",

"description": "Copies the contact info received in a custom event called 'contactInfoSubmitted' to the current profile"

},

"raiseEventOnlyOnceForSession": false,

"condition": {

"type": "eventTypeCondition",

"parameterValues": {

"eventTypeId": "contactInfoSubmitted"

}

},

"actions": [

{

"type": "setPropertyAction",

"parameterValues": {

"setPropertyName": "properties(firstName)",

"setPropertyValue": "eventProperty::properties(firstName)",

"setPropertyStrategy": "alwaysSet"

}

},

{

"type": "setPropertyAction",

"parameterValues": {

"setPropertyName": "properties(lastName)",

"setPropertyValue": "eventProperty::properties(lastName)",

"setPropertyStrategy": "alwaysSet"

}

},

{

"type": "setPropertyAction",

"parameterValues": {

"setPropertyName": "properties(email)",

"setPropertyValue": "eventProperty::properties(email)",

"setPropertyStrategy": "alwaysSet"

}

}

]

}'What this rule does is that it listen for a custom event (events don’t need any registration, you can simply start sending them to Apache Unomi whenever you like) of type 'contactInfoSubmitted' and it will search for properties called 'firstName', 'lastName' and 'email' and copy them over to the profile with corresponding property names. You could of course change any of the property names to find your needs. For example you might want to prefix the profile properties with the source of the event, such as 'mobileApp:firstName'.

Now that our rule is defined, the next step is to create a scope and a JSON Schema corresponding to the event to be submitted.

We will start by creating a scope called "example" scope:

curl --location --request POST 'http://localhost:8181/cxs/scopes' \

-u 'karaf:karaf' \

--header 'Content-Type: application/json' \

--data-raw '{

"itemId": "example",

"itemType": "scope"

}'The next step consist in creating a JSON Schema to validate our event.

curl --location --request POST 'http://localhost:8181/cxs/jsonSchema' \

-u 'karaf:karaf' \

--header 'Content-Type: application/json' \

--data-raw '{

"$id": "https://unomi.apache.org/schemas/json/events/contactInfoSubmitted/1-0-0",

"$schema": "https://json-schema.org/draft/2019-09/schema",

"self": {

"vendor": "org.apache.unomi",

"name": "contactInfoSubmitted",

"format": "jsonschema",

"target": "events",

"version": "1-0-0"

},

"title": "contactInfoSubmittedEvent",

"type": "object",

"allOf": [{ "$ref": "https://unomi.apache.org/schemas/json/event/1-0-0" }],

"properties": {

"source" : {

"$ref" : "https://unomi.apache.org/schemas/json/item/1-0-0"

},

"target" : {

"$ref" : "https://unomi.apache.org/schemas/json/item/1-0-0"

},

"properties": {

"type": "object",

"properties": {

"firstName": {

"type": ["null", "string"]

},

"lastName": {

"type": ["null", "string"]

},

"email": {

"type": ["null", "string"]

}

}

}

},

"unevaluatedProperties": false

}'You can notice the following in the above schema:

-

We are creating a schema of type "events" ("self.target" equals "events")

-

The name of this schema is "contactInfoSubmitted", this MUST match the value of the "eventType" field in the event itself (below)

-

To simplify our schema declaration, we’re referring to an already existing schema (https://unomi.apache.org/schemas/json/item/1-0-0) to validate the "source" and "target" properties. Apache Unomi ships with a set of predefined JSON Schemas, detailed here: https://github.com/apache/unomi/tree/master/extensions/json-schema/services/src/main/resources/META-INF/cxs/schemas.

-

"unevaluatedProperties": falseindicates that the event should be rejected if it contains any additional metadata.

Finally, send the contactInfoSubmitted event using a request similar to this one:

curl -X POST http://localhost:8181/cxs/eventcollector \

-H "Content-Type: application/json" \

--data-raw '{

"sessionId" : "1234",

"events":[

{

"eventType":"contactInfoSubmitted",

"scope": "example",

"source":{

"itemType": "site",

"scope": "example",

"itemId": "mysite"

},

"target":{

"itemType": "form",

"scope": "example",

"itemId": "contactForm"

},

"properties" : {

"firstName": "John",

"lastName": "Doe",

"email": "john.doe@acme.com"

}

}

]

}'The event we just submitted can be retrieved using the following request:

curl -X POST http://localhost:8181/cxs/events/search \

--user karaf:karaf \

-H "Content-Type: application/json" \

--data-raw '{

"offset" : 0,

"limit" : 20,

"condition" : {

"type": "eventPropertyCondition",

"parameterValues" : {

"propertyName" : "properties.firstName",

"comparisonOperator" : "equals",

"propertyValue" : "John"

}

}

}'Troubleshooting common errors

There could be two types of common errors while customizing the above requests: * The schema is invalid * The event is invalid

While first submitting the schema during its creation, Apache Unomi will validate it is syntaxically correct (JSON) but will not perform any further validation. Since the schema will be processed for the first time when events are submitted, errors might be noticeable at that time.

Those errors are usually self-explanatory, such as this one pointing to an incorrect lcoation for the "firstName" keyword:

09:35:56.573 WARN [qtp1421852915-83] Unknown keyword firstName - you should define your own Meta Schema. If the keyword is irrelevant for validation, just use a NonValidationKeywordIf an event is invalid, the logs will contain details about the part of the event that did not validate against the schema. In the example below, an extra property "abcd" was added to the event:

12:27:04.269 DEBUG [qtp1421852915-481] Schema validation found 1 errors while validating against schema: https://unomi.apache.org/schemas/json/events/contactInfoSubmitted/1-0-0

12:27:04.272 DEBUG [qtp1421852915-481] Validation error: There are unevaluated properties at following paths $.properties.abcd

12:27:04.273 ERROR [qtp1421852915-481] An event was rejected - switch to DEBUG log level for more information3.1.5. How to search for profile events

Sometimes you want to retrieve events for a known profile. You will need to provide a query in the body of the request that looks something like this (and documentation is available in the REST API) :

curl -X POST http://localhost:8181/cxs/events/search \

--user karaf:karaf \

-H "Content-Type: application/json" \

--data-raw '{

"offset" : 0,

"limit" : 20,

"condition" : {

"type": "eventPropertyCondition",

"parameterValues" : {

"propertyName" : "profileId",

"comparisonOperator" : "equals",

"propertyValue" : "PROFILE_ID"

}

}

}'where PROFILE_ID is a profile identifier. This will indeed retrieve all the events for a given profile.

3.1.6. How to create a new rule

There are basically two ways to create a new rule :

-

Using the REST API

-

Packaging it as a predefined rule in a plugin

In both cases the JSON structure for the rule will be exactly the same, and in most scenarios it will be more interesting to use the REST API to create and manipulate rules, as they don’t require any development or deployments on the Apache Unomi server.

curl -X POST http://localhost:8181/cxs/rules \

--user karaf:karaf \

-H "Content-Type: application/json" \

--data-raw '{

"metadata": {

"id": "exampleEventCopy",

"name": "Example Copy Event to Profile",

"description": "Copy event properties to profile properties"

},

"condition": {

"type": "eventTypeCondition",

"parameterValues": {

"eventTypeId" : "myEvent"

}

},

"actions": [

{

"parameterValues": {

},

"type": "allEventToProfilePropertiesAction"

}

]

}'The above rule will be executed if the incoming event is of type myEvent and will simply copy all the properties

contained in the event to the current profile.

3.1.7. How to search for profiles

In order to search for profiles you will have to use the /cxs/profiles/search endpoint that requires a Query JSON structure. Here’s an example of a profile search with a Query object:

curl -X POST http://localhost:8181/cxs/profiles/search \

--user karaf:karaf \

-H "Content-Type: application/json" \

--data-raw '{

"text" : "unomi",

"offset" : 0,

"limit" : 10,

"sortby" : "properties.lastName:asc,properties.firstName:desc",

"condition" : {

"type" : "booleanCondition",

"parameterValues" : {

"operator" : "and",

"subConditions" : [

{

"type": "profilePropertyCondition",

"parameterValues": {

"propertyName": "properties.leadAssignedTo",

"comparisonOperator": "exists"

}

},

{

"type": "profilePropertyCondition",

"parameterValues": {

"propertyName": "properties.lastName",

"comparisonOperator": "exists"

}

}

]

}

}

}'In the above example, you search for all the profiles that have the leadAssignedTo and lastName properties and that

have the unomi value anywhere in their profile property values. You are also specifying that you only want 10 results

beginning at offset 0. The results will be also sorted in alphabetical order for the lastName property value, and then

by reverse alphabetical order for the firstName property value.

As you can see, queries can be quite complex. Please remember that the more complex the more resources it will consume on the server and potentially this could affect performance.

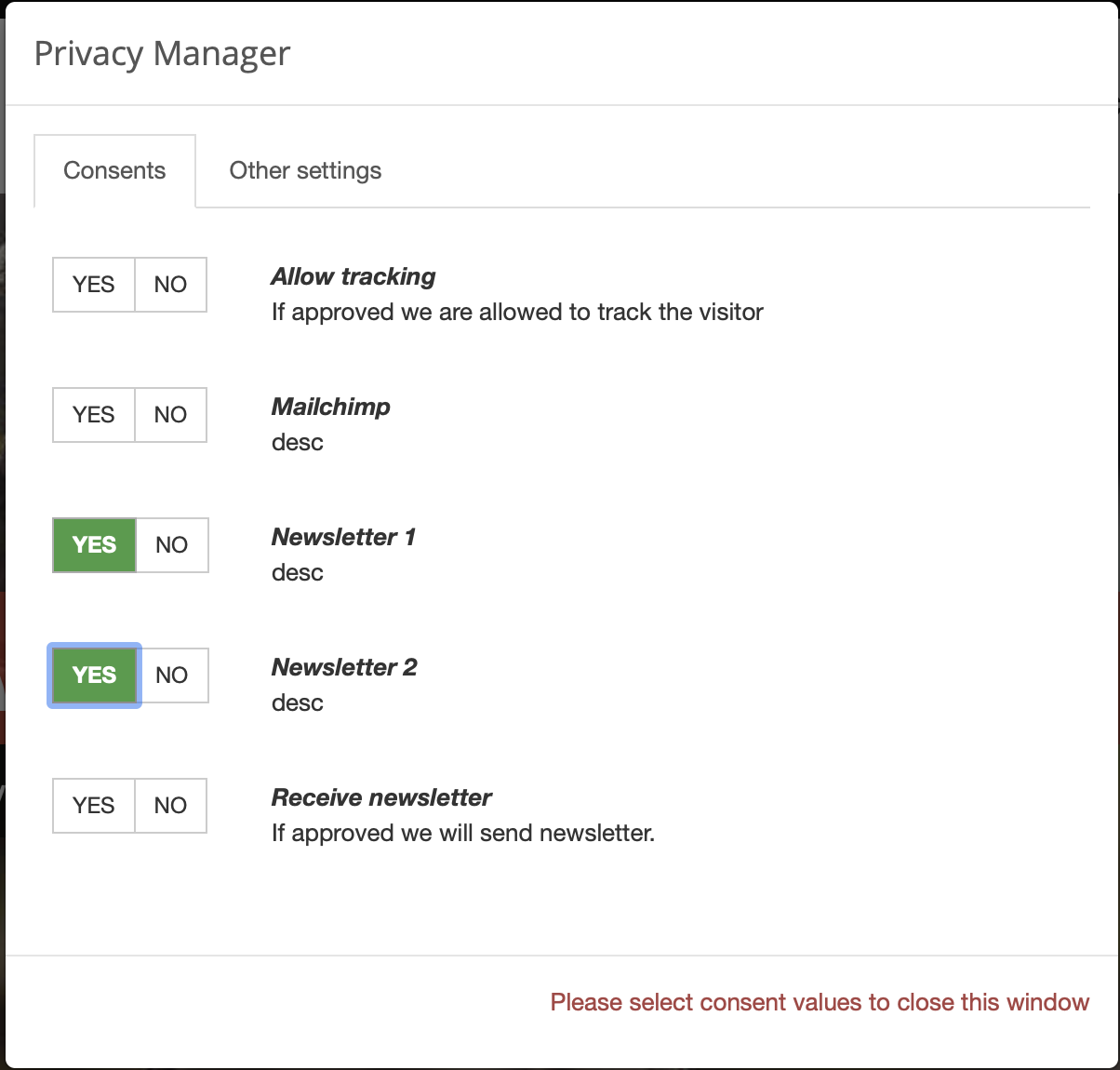

3.1.8. Getting / updating consents

You can find information on how to retrieve or create/update consents in the Consent API section.

3.1.9. How to send a login event to Unomi

Tracking logins must be done carefully with Unomi. A login event is considered a "privileged" event and therefore for not be initiated from the public internet. Ideally user authentication should always be validated by a trusted third- party even if it is a well-known social platform such as Facebook or Twitter. Basically what should NEVER be done:

-

Login to a social platform

-

Call back to the originating page

-

Send a login event to Unomi from the page originating the login in step 1

The problem with this, is that any attacker could simply directly call step 3 without any kind of security. Instead the flow should look something like this:

-

Login to a social platform

-

Call back to a special secured system that performs an server-to-server call to send the login event to Apache Unomi using the Unomi key.

For simplicity reasons, in our login example, the first method is used, but it really should never be done like this in production because of the aforementioned security issues. The second method, although a little more involved, is much preferred.

When sending a login event, you can setup a rule that can check a profile property to see if profiles can be merged on an universal identifier such as an email address.

In our login sample we provide an example of such a rule. You can find it here:

As you can see in this rule, we call an action called :

mergeProfilesOnPropertyAction

with as a parameter value the name of the property on which to perform the merge (the email). What this means is that upon successful login using an email, Unomi will look for other profiles that have the same email and merge them into a single profile. Because of the merge, this should only be done for authenticated profiles, otherwise this could be a security issue since it could be a way to load data from other profiles by merging their data !

3.1.10. What profile aliases are and how to use them

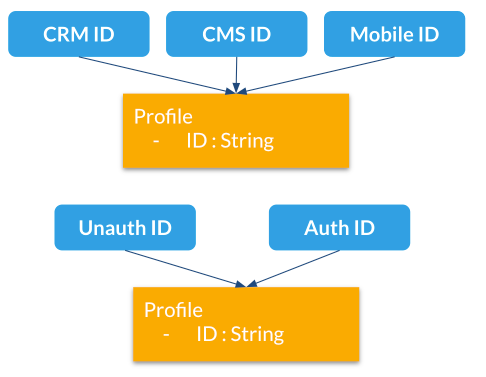

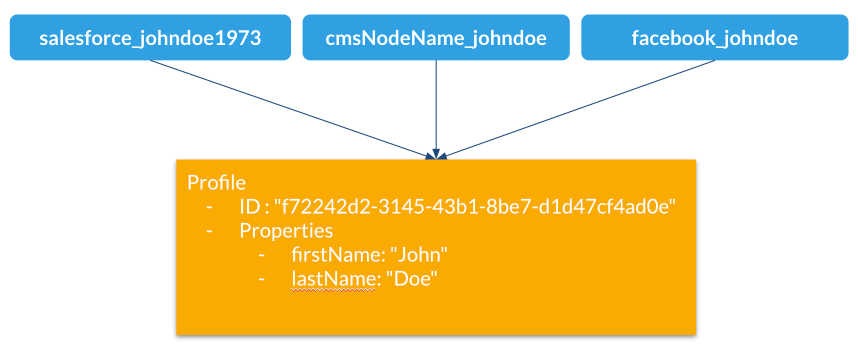

Profile aliases make it possible to reference profiles using multiple identifiers.

The profile alias object basically contains a link between the alias ID and the profile ID. The itemId of a profile alias is the actual alias ID, which the profileID field contains the reference to the aliased profile.

What they are

Profile aliases:

-

Make it possible to lookup profiles by main (Unomi) ID or by any other alias ID

-

Aliases are just IDs stored in a dedicated index

-

A profile may have an unlimited number of aliases attached to it.

How to use them

Here are different use cases for profile aliases:

-

Connect different systems to Unomi such as a CRM, CMS and native mobile app that all have their own iD for a single customer

-

Merging profiles when a visitor is identified

-

Adding new IDs at a later time

Example

Here is an example of multiple external aliases pointing to a single Unomi profile

Interactions with merging

Profile merges have been modified to use aliases starting Unomi 2

Upon merge:

-

Properties are copied to the master profile as before

-

An alias is created for the "master" profile with the ID of the merged profile

-

Merged profiles are now deleted

-

"mergedWith" property is no longer used since we deleted the merged profiles

API

/context.json and /eventcollector will now look up profiles by profile ID or aliases from the same cookie (context-profile-id) or body parameters (profileId)

| Verb | Path | Description |

|---|---|---|

GET |

/cxs/profiles/PROFILE_ID_OR_ALIAS |

Retrieves a profile by ID or Alias ID (useful if an external system wants to get a profile) |

GET |

/cxs/profiles/PROFILE_ID/aliases |

Get all the aliases for a profile |

POST |

/cxs/profiles/PROFILE_ID/aliases/ALIAS_ID |

Add an alias to a profile |

DELETE |

/cxs/profiles/PROFILE_ID/aliases/ALIAS_ID |

Remove an alias from a profile |

3.2. Request examples

3.2.1. Retrieving your first context

You can retrieve a context using curl like this :

curl http://localhost:8181/cxs/context.js?sessionId=1234This will retrieve a JavaScript script that contains a cxs object that contains the context with the current user

profile, segments, scores as well as functions that makes it easier to perform further requests (such as collecting

events using the cxs.collectEvents() function).

3.2.2. Retrieving a context as a JSON object.

If you prefer to retrieve a pure JSON object, you can simply use a request formed like this:

curl http://localhost:8181/cxs/context.json?sessionId=12343.2.3. Accessing profile properties in a context

By default, in order to optimize the amount of data sent over the network, Apache Unomi will not send the content of the profile or session properties. If you need this data, you must send a JSON object to configure the resulting output of the context.js(on) servlet.

Here is an example that will retrieve all the session and profile properties, as well as the profile’s segments and scores

curl -X POST http://localhost:8181/cxs/context.json?sessionId=1234 \

-H "Content-Type: application/json" \

-d @- <<'EOF'

{

"source": {

"itemId":"homepage",

"itemType":"page",

"scope":"example"

},

"requiredProfileProperties":["*"],

"requiredSessionProperties":["*"],

"requireSegments":true,

"requireScores":true

}

EOFThe requiredProfileProperties and requiredSessionProperties are properties that take an array of property names

that should be retrieved. In this case we use the wildcard character '*' to say we want to retrieve all the available

properties. The structure of the JSON object that you should send is a JSON-serialized version of the ContextRequest

Java class.

3.2.4. Sending events using the context servlet

At the same time as you are retrieving the context, you can also directly send events in the ContextRequest object as illustrated in the following example:

curl -X POST http://localhost:8181/cxs/context.json?sessionId=1234 \

-H "Content-Type: application/json" \

-d @- <<'EOF'

{

"source":{

"itemId":"homepage",

"itemType":"page",

"scope":"example"

},

"events":[

{

"eventType":"view",

"scope": "example",

"source":{

"itemType": "site",

"scope":"example",

"itemId": "mysite"

},

"target":{

"itemType":"page",

"scope":"example",

"itemId":"homepage",

"properties":{

"pageInfo":{

"referringURL":"https://apache.org/"

}

}

}

}

]

}

EOFUpon received events, Apache Unomi will execute all the rules that match the current context, and return an updated context. This way of sending events is usually used upon first loading of a page. If you want to send events after the page has finished loading you could either do a second call and get an updating context, or if you don’t need the context and want to send events in a network optimal way you can use the eventcollector servlet (see below).

3.2.5. Sending events using the eventcollector servlet

If you only need to send events without retrieving a context, you should use the eventcollector servlet that is optimized respond quickly and minimize network traffic. Here is an example of using this servlet:

curl -X POST http://localhost:8181/cxs/eventcollector \

-H "Content-Type: application/json" \

-d @- <<'EOF'

{

"sessionId" : "1234",

"events":[

{

"eventType":"view",

"scope": "example",

"source":{

"itemType": "site",

"scope":"example",

"itemId": "mysite"

},

"target":{

"itemType":"page",

"scope":"example",

"itemId":"homepage",

"properties":{

"pageInfo":{

"referringURL":"https://apache.org/"

}

}

}

}

]

}

EOFNote that the eventcollector executes the rules but does not return a context. If is generally used after a page is loaded to send additional events.

3.2.6. Where to go from here

-

You can find more useful Apache Unomi URLs that can be used in the same way as the above examples.

-

Read the Twitter sample documentation that contains a detailed example of how to integrate with Apache Unomi.

4. Configuration

4.1. Centralized configuration

Apache Unomi uses a centralized configuration file that contains both system properties and configuration properties. These settings are then fed to the OSGi and other configuration files using placeholder that look something like this:

contextserver.publicAddress=${org.apache.unomi.cluster.public.address:-http://localhost:8181}

contextserver.internalAddress=${org.apache.unomi.cluster.internal.address:-https://localhost:9443}Default values are stored in a file called $MY_KARAF_HOME/etc/custom.system.properties but you should never modify

this file directly, as an override mechanism is available. Simply create a file called:

unomi.custom.system.properties

and put your own property values in their to override the defaults OR you can use environment variables to also override

the values in the $MY_KARAF_HOME/etc/custom.system.properties. See the next section for more information about that.

4.2. Changing the default configuration using environment variables (i.e. Docker configuration)

You might want to use environment variables to change the default system configuration, especially if you intend to run Apache Unomi inside a Docker container. You can find the list of all the environment variable names in the following file:

If you are using Docker Container, simply pass the environment variables on the docker command line or if you are using Docker Compose you can put the environment variables in the docker-compose.yml file.

If you want to "save" the environment values in a file, you can use the bin/setenv(.bat) to setup the environment

variables you want to use.

4.3. Changing the default configuration using property files

If you want to change the default configuration using property files instead of environment variables, you can perform

any modification you want in the $MY_KARAF_HOME/etc/unomi.custom.system.properties file.

By default this file does not exist and is designed to be a file that will contain only your custom modifications to the default configuration.

For example, if you want to change the HTTP ports that the server is listening on, you will need to create the following lines in the $MY_KARAF_HOME/etc/unomi.custom.system.properties (and create it if you haven’t yet) file:

org.osgi.service.http.port.secure=9443

org.osgi.service.http.port=8181If you change these ports, also make sure you adjust the following settings in the same file :

org.apache.unomi.cluster.public.address=http://localhost:8181

org.apache.unomi.cluster.internal.address=https://localhost:9443If you need to specify an ElasticSearch cluster name, or a host and port that are different than the default, it is recommended to do this BEFORE you start the server for the first time, or you will loose all the data you have stored previously.

You can use the following properties for the ElasticSearch configuration

org.apache.unomi.elasticsearch.cluster.name=contextElasticSearch

# The elasticsearch.adresses may be a comma seperated list of host names and ports such as

# hostA:9200,hostB:9200

# Note: the port number must be repeated for each host.

org.apache.unomi.elasticsearch.addresses=localhost:92004.4. Secured events configuration

Apache Unomi secures some events by default. It comes out of the box with a default configuration that you can adjust

by using the centralized configuration file override in $MY_KARAF_HOME/etc/unomi.custom.system.properties

You can find the default configuration in the following file:

$MY_KARAF_HOME/etc/custom.system.properties

The properties start with the prefix : org.apache.unomi.thirdparty.* and here are the default values :

org.apache.unomi.thirdparty.provider1.key=${env:UNOMI_THIRDPARTY_PROVIDER1_KEY:-670c26d1cc413346c3b2fd9ce65dab41}

org.apache.unomi.thirdparty.provider1.ipAddresses=${env:UNOMI_THIRDPARTY_PROVIDER1_IPADDRESSES:-127.0.0.1,::1}

org.apache.unomi.thirdparty.provider1.allowedEvents=${env:UNOMI_THIRDPARTY_PROVIDER1_ALLOWEDEVENTS:-login,updateProperties}

The events set in allowedEvents will be secured and will only be accepted if the call comes from the specified IP address, and if the secret-key is passed in the X-Unomi-Peer HTTP request header. The "env:" part means that it will attempt to read an environment variable by that name, and if it’s not found it will default to the value after the ":-" marker.

It is now also possible to use IP address ranges instead of having to list all valid IP addresses for event sources. This is very useful when working in cluster deployments where servers may be added or removed dynamically. In order to support this Apache Unomi uses a library called IPAddress that supports IP ranges and subnets. Here is an example of how to setup a range:

org.apache.unomi.thirdparty.provider1.ipAddresses=${env:UNOMI_THIRDPARTY_PROVIDER1_IPADDRESSES:-192.168.1.1-100,::1}

The above configuration will allow a range of IP addresses between 192.168.1.1 and 192.168.1.100 as well as the IPv6 loopback.

Here’s another example using the subnet format:

org.apache.unomi.thirdparty.provider1.ipAddresses=${env:UNOMI_THIRDPARTY_PROVIDER1_IPADDRESSES:-1.2.0.0/16,::1}

The above configuration will allow all addresses starting with 1.2 as well as the IPv6 loopback address.

Wildcards may also be used:

org.apache.unomi.thirdparty.provider1.ipAddresses=${env:UNOMI_THIRDPARTY_PROVIDER1_IPADDRESSES:-1.2.*.*,::1}

The above configuration is exactly the same as the previous one.

More advanced ranges and subnets can be used as well, please refer to the IPAddress library documentation for details on how to format them.

If you want to add another provider you will need to add them manually in the following file (and make sure you maintain the changes when upgrading) :

$MY_KARAF_HOME/etc/org.apache.unomi.thirdparty.cfg

Usually, login events, which operate on profiles and do merge on protected properties, must be secured. For each trusted third party server, you need to add these 3 lines :

thirdparty.provider1.key=secret-key

thirdparty.provider1.ipAddresses=127.0.0.1,::1

thirdparty.provider1.allowedEvents=login,updateProperties4.5. Installing the MaxMind GeoIPLite2 IP lookup database

Apache Unomi requires an IP database in order to resolve IP addresses to user location. The GeoLite2 database can be downloaded from MaxMind here : http://dev.maxmind.com/geoip/geoip2/geolite2/

Simply download the GeoLite2-City.mmdb file into the "etc" directory.

4.6. Installing Geonames database

Apache Unomi includes a geocoding service based on the geonames database ( http://www.geonames.org/ ). It can be used to create conditions on countries or cities.

In order to use it, you need to install the Geonames database into . Get the "allCountries.zip" database from here : http://download.geonames.org/export/dump/

Download it and put it in the "etc" directory, without unzipping it.

Edit $MY_KARAF_HOME/etc/unomi.custom.system.properties and set org.apache.unomi.geonames.forceImport to true,

import should start right away.

Otherwise, import should start at the next startup. Import runs in background, but can take about 15 minutes.

At the end, you should have about 4 million entries in the geonames index.

4.7. REST API Security

The Apache Unomi Context Server REST API is protected using JAAS authentication and using Basic or Digest HTTP auth. By default, the login/password for the REST API full administrative access is "karaf/karaf".

The generated package is also configured with a default SSL certificate. You can change it by following these steps :

Replace the existing keystore in $MY_KARAF_HOME/etc/keystore by your own certificate :

Update the keystore and certificate password in $MY_KARAF_HOME/etc/unomi.custom.system.properties file :

org.ops4j.pax.web.ssl.keystore=${env:UNOMI_SSL_KEYSTORE:-${karaf.etc}/keystore}

org.ops4j.pax.web.ssl.password=${env:UNOMI_SSL_PASSWORD:-changeme}

org.ops4j.pax.web.ssl.keypassword=${env:UNOMI_SSL_KEYPASSWORD:-changeme}You should now have SSL setup on Karaf with your certificate, and you can test it by trying to access it on port 9443.

Changing the default Karaf password can be done by modifying the org.apache.unomi.security.root.password in the

$MY_KARAF_HOME/etc/unomi.custom.system.properties file

4.8. Scripting security

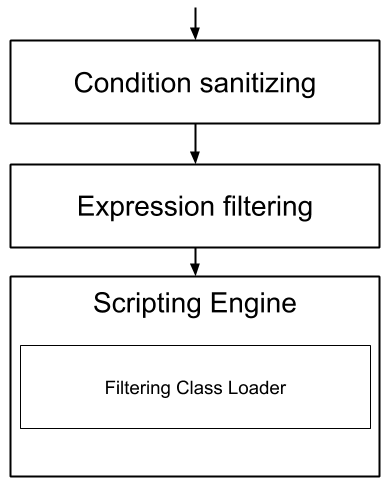

4.8.1. Multi-layer scripting filtering system

The scripting security system is multi-layered.

For requests coming in through the /cxs/context.json endpoint, the following flow is used to secure incoming requests:

Conditions submitted through the context.json public endpoint are first sanitized, meaning that any scripting directly injected is removed. However, as conditions can use sub conditions that include scripting, only the first directly injected layer of scripts are removed.

The second layer is the expression filtering system, that uses an allow-listing mechanism to only accept pre-vetted expressions (through configuration and deployment on the server side). Any unrecognized expression will not be accepted.

Finally, once the script starts executing in the scripting engine, a filtering class loader will only let the script access classes that have been allowed.

This multi-layered approach makes it possible to retain a high level of security even if one layer is poorly configured or abused.

For requests coming in through the secure APIs such as rules, only the condition sanitizing step is skipped, otherwise the rest of the filtering system is the same.

4.8.2. Scripts and expressions

Apache Unomi allows using different types of expressions in the following subsystems:

-

context.json filters and personalization queries

-

rule conditions and actions parameters

Apache Unomi uses two integrated scripting languages to provide this functionality: OGNL and MVEL. OGNL is deprecated and is now disabled by default since 1.5.2 as it is little used (and replaced by better performing hardcoded property lookups). MVEL is more commonly used in rule actions as in the following example:

{

"metadata": {

"id": "_ajhg9u2s5_sessionAssigned",

"name": "Session assigned to a profile",

"description": "Update profile visit information",

"readOnly":true

},

"condition": {

"type": "booleanCondition",

"parameterValues": {

"subConditions":[

{

"type": "eventTypeCondition",

"parameterValues": {

"eventTypeId": "sessionCreated"

}

},

{

"type": "eventTypeCondition",

"parameterValues": {

"eventTypeId": "sessionReassigned"

}

}

],

"operator":"or"

}

},

"actions": [

{

"parameterValues": {

"setPropertyName": "properties.previousVisit",

"setPropertyValue": "profileProperty::lastVisit",

"storeInSession": false

},

"type": "setPropertyAction"

},

{

"parameterValues": {

"setPropertyName": "properties.lastVisit",

"setPropertyValue": "now",

"storeInSession": false

},

"type": "setPropertyAction"

},

{

"parameterValues": {

"setPropertyName": "properties.nbOfVisits",

"setPropertyValue": "script::profile.properties.?nbOfVisits != null ? (profile.properties.nbOfVisits + 1) : 1",

"storeInSession": false

},

"type": "setPropertyAction"

}

]

}As we see in the above example, we use an MVEL script with the setPropertyAction to set a property value. Starting with version 1.5.2, any expression use in rules MUST be allow-listed.

OGNL was previously used wherever a parameter could be used, but MVEL could only be used with a “script::” prefix. Starting with version 1.5.2 OGNL will no longer be allowed and is replaced by a compatible “hardcoded” property lookup system, while MVEL requires allow-listing the scripts that are to be used.

By default, Apache Unomi comes with some built-in allowed expressions that cover all the internal uses cases.

Default allowed MVEL expressions (from https://github.com/apache/unomi/blob/unomi-1.5.x/plugins/baseplugin/src/main/resources/META-INF/cxs/expressions/mvel.json) :

[

"\\Q'systemProperties.goals.'+goalId+'TargetReached'\\E",

"\\Q'now-'+since+'d'\\E",

"\\Q'scores.'+scoringPlanId\\E",

"\\QminimumDuration*1000\\E",

"\\QmaximumDuration*1000\\E",

"\\Qprofile.properties.?nbOfVisits != null ? (profile.properties.nbOfVisits + 1) : 1\\E",

"\\Qsession != null ? session.size + 1 : 0\\E",

"\\Q'properties.optimizationTest_'+event.target.itemId\\E",

"\\Qevent.target.properties.variantId\\E",

"\\Qprofile.properties.?systemProperties.goals.\\E[\\w\\_]*\\QReached != null ? (profile.properties.systemProperties.goals.\\E[\\w\\_]*\\QReached) : 'now'\\E",

"\\Qprofile.properties.?systemProperties.campaigns.\\E[\\w\\_]*\\QEngaged != null ? (profile.properties.systemProperties.campaigns.\\E[\\w\\_]*\\QEngaged) : 'now'\\E"

]If you require or are already using custom expressions, you should add a plugin to Apache Unomi to allow for this. The choice of a plugin was to make sure only system administrators and solution developers could provide such a list, avoiding the possibility to provide it through an API call or another security sensitive deployment mechanism.

There is another way of allow-listing expressions through configuration, see the “scripting configuration parameters” section below.

Procedure to add allowed expressions:

-

Create a new Apache Unomi plugin project.

-

Create a JSON file in src/main/resources/META-INF/cxs/expressions/mvel.json with an array of regular expressions that will contain the allowed expressions.

-

Build the project and deploy it to Apache Unomi

Warning: Do not make regular expressions too general. They should actually be as specific as possible to avoid potential injection of malicious code.

4.8.3. Scripting expression filtering configuration parameters

Alongside with the allow-listing technology, there are new configuration parameters to control the security of the scripting engines:

# These parameters control the list of classes that are allowed or forbidden when executing expressions.

org.apache.unomi.scripting.allow=${env:UNOMI_ALLOW_SCRIPTING_CLASSES:-org.apache.unomi.api.Event,org.apache.unomi.api.Profile,org.apache.unomi.api.Session,org.apache.unomi.api.Item,org.apache.unomi.api.CustomItem,ognl.*,java.lang.Object,java.util.Map,java.util.HashMap,java.lang.Integer,org.mvel2.*}

org.apache.unomi.scripting.forbid=${env:UNOMI_FORBID_SCRIPTING_CLASSES:-}

# This parameter controls the whole expression filtering system. It is not recommended to turn it off. The main reason to turn it off would be to check if it is interfering with something, but it should always be active in production.

org.apache.unomi.scripting.filter.activated=${env:UNOMI_SCRIPTING_FILTER_ACTIVATED:-true}

# The following parameters control the filtering using regular expressions for each scripting sub-system.

# The "collections" parameter tells the expression filtering system which configurations to expect. By default only MVEL and/or OGNL are accepted values, but in the future these might be replaced by new scripting sub-systems.

org.apache.unomi.scripting.filter.collections=${env:UNOMI_SCRIPTING_FILTER_COLLECTIONS:-mvel,ognl}

# For each scripting sub-system, there is an allow and a forbid property that reference a .json files,

# you can either edit this files or reference your own file directly in the following config.

# Note: You can add new expressions to the "allow" file, although it is better to add them inside any plugins you may be adding.

# This configuration is only designed to compensate for the cases where something was not properly designed or to deal with compatibility issues.

# Just be VERY careful to make your patterns AS SPECIFIC AS POSSIBLE in order to avoid introducing a way to abuse the expression filtering.

# Note: It is NOT recommended to change the built-in "forbid" value unless you are having issues with its value.

# Note: mvel-allow.json contains an empty array: [], this mean nothing is allowed, so far.

# If you want to allow all expression, just remove the property org.apache.unomi.scripting.filter.mvel.allow, but this is not recommended

# It's better to list your expressions, and provide them in the mvel-allow.json file

# example: ["\\Qsession.size + 1\\E"]

org.apache.unomi.scripting.filter.mvel.allow=${env:UNOMI_SCRIPTING_FILTER_MVEL_ALLOW:-${karaf.etc}/mvel-allow.json}

org.apache.unomi.scripting.filter.mvel.forbid=${env:UNOMI_SCRIPTING_FILTER_MVEL_FORBID:-${karaf.etc}/mvel-forbid.json}

org.apache.unomi.scripting.filter.ognl.allow=${env:UNOMI_SCRIPTING_FILTER_OGNL_ALLOW:-${karaf.etc}/ognl-allow.json}

org.apache.unomi.scripting.filter.ognl.forbid=${env:UNOMI_SCRIPTING_FILTER_OGNL_FORBID:-${karaf.etc}/ognl-forbid.json}

# This parameter controls whether OGNL scripting is allowed in expressions. Because of security reasons it is deactivated by default. If you run into compatibility issues you could reactivate it but it is at your own risk.

org.apache.unomi.security.properties.useOGNLScripting=${env:UNOMI_SCRIPTING_USE_OGNL:-false}

# This parameter controls the condition sanitizing done on the ContextServlet (/cxs/context.json). If will remove any expressions that start with "script::". It is not recommended to change this value, unless you run into compatibility issues.

org.apache.unomi.security.personalization.sanitizeConditions=${env:UNOMI_SECURITY_SANITIZEPERSONALIZATIONCONDITIONS:-true}4.8.4. Groovy Actions

Groovy actions offer the ability to define a set of actions and action types (aka action descriptors) purely from Groovy scripts defined at runtime.

Initially submitted to Unomi through a purpose-built REST API endpoint, Groovy actions are then stored in Elasticsearch. When an event matches a rule configured to execute an action, the corresponding action is fetched from Elasticsearch and executed.

Anatomy of a Groovy Action

To be valid, a Groovy action must follow a particular convention which is divided in two parts:

-

An annotation used to define the associated action type

-

The function to be executed

Placed right before the function, the “@Action” annotation contains a set of parameter detailing how the action should be triggered.

| Field name | Type | Required | Description |

|---|---|---|---|

id |

String |

YES |

Id of the action |

actionExecutor |

String |

YES |

Action executor contains the name of the script to call for the action type and must be prefixed with “groovy:”. The prefix indicates to Unomi which dispatcher to use when processing the action. |

name |

String |

Action name |

|

hidden |

Boolean |

Define if the action is hidden or not. It is usually used to hide objects in a UI. |

|

parameters |

List<Parameter> |

The parameters of the actions, also defined by annotations |

|

systemTags |

List<String> |

A (reserved) list of tags for the associated object. This is usually populated through JSON descriptors and is not meant to be modified by end users. These tags may include values that help classify associated objects. |

The function contained within the Groovy Action must be called execute() and its last instruction must be an integer.

This integer serves as an indication whether the values of the session and profile should be persisted. In general, the codes used are defined in the EventService interface.

Each groovy actions extends by default a Base script defined here

REST API

Actions can be deployed/updated/deleted via the dedicated /cxs/groovyActions rest endpoint.

Deploy/update an Action:

curl -X POST 'http://localhost:8181/cxs/groovyActions' \

--user karaf:karaf \

--form 'file=@"<file location>"'A Groovy Action can be updated by submitting another Action with the same id.

Delete an Action:

curl -X DELETE 'http://localhost:8181/cxs/groovyActions/<Action id>' \

--user karaf:karafNote that when a groovy action is deleted by the API, the action type associated with this action will also be deleted.

Hello World!

In this short example, we’re going to create a Groovy Action that will be adding “Hello world!” to the logs whenever a new view event is triggered.

The first step consists in creating the groovy script on your filesystem, start by creating the file hello-world.groovy:

@Action(id = "helloWorldGroovyAction",

actionExecutor = "groovy:helloWorldAction",

parameters = [@Parameter(id = "location", type = "string", multivalued = false)])

def execute() {

logger.info("Hello {}", action.getParameterValues().get("location"))

EventService.NO_CHANGE

}As the last instruction of the script is EventService.NO_CHANGE, data will not be persisted.

Once the action has been created you need to submit it to Unomi (from the same folder as hello-world.groovy).

curl -X POST 'http://localhost:8181/cxs/groovyActions' \

--user karaf:karaf \

--form 'file=@hello-world.groovy'Finally, register a rule to trigger execution of the groovy action:

curl -X POST 'http://localhost:8181/cxs/rules' \

--user karaf:karaf \

--header 'Content-Type: application/json' \

--data-raw '{

"metadata": {

"id": "scriptGroovyActionRule",

"name": "Test Groovy Action Rule",

"description": "A sample rule to test Groovy actions"

},

"condition": {

"type": "eventTypeCondition",

"parameterValues": {

"eventTypeId": "view"

}

},

"actions": [

{

"parameterValues": {

"location": "world!"

},

"type": "helloWorldGroovyAction"

}

]

}'Note that this rule contains a “location” parameter, with the value “world!”, which is then used in the log message triggered by the action.

You can now use unomi to trigger a “view” event and see the corresponding message in the Unomi logs.

Once you’re done with the Hello World! action, it can be deleted using the following command:

curl -X DELETE 'http://localhost:8181/cxs/groovyActions/helloWorldGroovyAction' \

--user karaf:karafAnd the corresponding rule can be deleted using the following command:

curl -X DELETE 'http://localhost:8181/cxs/rules/scriptGroovyActionRule' \

--user karaf:karafInject an OSGI service in a groovy script

It’s possible to use the services provided by unomi directly in the groovy actions.

In the following example, we are going to create a groovy action that displays the number of existing profiles by using the profile service provided by unomi.

import org.osgi.framework.Bundle

import org.osgi.framework.BundleContext

import org.osgi.framework.FrameworkUtil

import org.apache.unomi.groovy.actions.GroovyActionDispatcher

import org.osgi.framework.ServiceReference

import org.slf4j.Logger

import org.slf4j.LoggerFactory

final Logger logger = LoggerFactory.getLogger(GroovyActionDispatcher.class.getName());

@Action(id = "displayNumberOfProfilesAction", actionExecutor = "groovy:DisplayNumberOfProfilesAction", description = "Display the number of existing profiles")

def execute() {

// Use OSGI function to get the bundleContext

Bundle bundle = FrameworkUtil.getBundle(GroovyActionDispatcher.class);

BundleContext context = bundle.getBundleContext();

// Get the service reference

ServiceReference<ProfileService> serviceReference = context.getServiceReference(ProfileService.class);

// Get the service you are looking for

ProfileService profileService = context.getService(serviceReference);

// Example of displaying the number of profile

logger.info("Display profile count")

logger.info(profileService.getAllProfilesCount().toString())

return EventService.NO_CHANGE

}

Known limitation

Only the services accessible by the class loader of the GroovyActionDispatcher class can be used in the groovy actions. That includes the services in the following packages:

org.apache.unomi.api.actions org.apache.unomi.api.services org.apache.unomi.api org.apache.unomi.groovy.actions org.apache.unomi.groovy.actions.annotations org.apache.unomi.groovy.actions.services org.apache.unomi.metrics org.apache.unomi.persistence.spi org.apache.unomi.services.actions;version

4.8.5. Scripting roadmap

Scripting will probably undergo major changes in future versions of Apache Unomi, with the likely retirement of MVEL in favor of Groovy Actions detailed above.

These changes will not happen on maintenance versions of Apache Unomi, only in the next major version. Maintenance versions will of course maintain compatibility with existing scripting solutions.

4.9. Automatic profile merging

Apache Unomi is capable of merging profiles based on a common property value. In order to use this, you must add the MergeProfileOnPropertyAction to a rule (such as a login rule for example), and configure it with the name of the property that will be used to identify the profiles to be merged. An example could be the "email" property, meaning that if two (or more) profiles are found to have the same value for the "email" property they will be merged by this action.

Upon merge, the old profiles are marked with a "mergedWith" property that will be used on next profile access to delete the original profile and replace it with the merged profile (aka "master" profile). Once this is done, all cookie tracking will use the merged profile.

To test, simply configure the action in the "login" or "facebookLogin" rules and set it up on the "email" property. Upon sending one of the events, all matching profiles will be merged.

4.10. Securing a production environment

Before going live with a project, you should absolutely read the following section that will help you setup a proper secure environment for running your context server.

Step 1: Install and configure a firewall

You should setup a firewall around your cluster of context servers and/or Elasticsearch nodes. If you have an application-level firewall you should only allow the following connections open to the whole world :

All other ports should not be accessible to the world.

For your Apache Unomi client applications (such as the Jahia CMS), you will need to make the following ports accessible :

8181 (Context Server HTTP port)

9443 (Context Server HTTPS port)The Apache Unomi actually requires HTTP Basic Auth for access to the Context Server administration REST API, so it is highly recommended that you design your client applications to use the HTTPS port for accessing the REST API.

The user accounts to access the REST API are actually routed through Karaf’s JAAS support, which you may find the documentation for here :

The default username/password is

karaf/karafYou should really change this default username/password as soon as possible. Changing the default Karaf password can be

done by modifying the org.apache.unomi.security.root.password in the $MY_KARAF_HOME/etc/unomi.custom.system.properties file

Or if you want to also change the user name you could modify the following file :

$MY_KARAF_HOME/etc/users.properties

But you will also need to change the following property in the $MY_KARAF_HOME/etc/unomi.custom.system.properties :

karaf.local.user = karaf

For your context servers, and for any standalone Elasticsearch nodes you will need to open the following ports for proper node-to-node communication : 9200 (Elasticsearch REST API), 9300 (Elasticsearch TCP transport)

Of course any ports listed here are the default ports configured in each server, you may adjust them if needed.

Step 2 : Follow industry recommended best practices for securing Elasticsearch

You may find more valuable recommendations here :

Step 4 : Setup a proxy in front of the context server

As an alternative to an application-level firewall, you could also route all traffic to the context server through a proxy, and use it to filter any communication.

4.11. Integrating with an Apache HTTP web server

If you want to setup an Apache HTTP web server in from of Apache Unomi, here is an example configuration using mod_proxy.

In your Unomi package directory, in $MY_KARAF_HOME/etc/unomi.custom.system.properties setup the public address for

the hostname unomi.apache.org:

org.apache.unomi.cluster.public.address=https://unomi.apache.org/ org.apache.unomi.cluster.internal.address=http://192.168.1.1:8181